Abstract

An evaluation measure of enablers and inhibitors to sustained evidence-based program (EBP) implementation may provide a useful tool to enhance organizations’ capacity. This paper outlines preliminary validation of such a measure. An expert informant and consumer feedback approach was used to tailor constructs from two existing measures assessing key domains associated with sustained implementation. Validity and reliability were evaluated for an inventory composed of five subscales: Program benefits, Program burden, Workplace support, Workplace cohesion, and Leadership style. Exploratory and confirmatory factor analysis with a sample of 593 Triple P—Positive Parenting Program—practitioners led to a 28-item scale with good reliability and good convergent, discriminant, and predictive validity. Practitioners sustaining implementation at least 3 years post-training were more likely to have supervision/peer support, reported higher levels of program benefit, workplace support, and positive leadership style, and lower program burden compared to practitioners who were non-sustainers.

Notes

The CFI, RMSEA, and SRMR fit indices were all affected by the Satorra–Bentler scaling correction for the chi-square statistic.

The average variance extracted (AVE) estimate represents the average amount of variation that a latent construct is able to explain in the observed variables that theoretically relate to the construct. It is calculated by averaging the squared factor loadings of the observed variables for each latent construct. Each squared factor loading represents the amount of variation in an observed variable that the latent construct accounts for. Averaging this variance across all observed variables that relate theoretically to the latent construct yields the AVE.
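The calculation described in this note can be sketched in a few lines of Python. This is a minimal illustration, assuming standardized loadings; the function name and the loading values are hypothetical and are not taken from the study.

```python
def average_variance_extracted(loadings):
    """Return the mean of the squared standardized loadings of one construct."""
    if not loadings:
        raise ValueError("at least one factor loading is required")
    # Each squared loading is the share of variance in one observed
    # variable that the latent construct accounts for.
    squared = [loading ** 2 for loading in loadings]
    return sum(squared) / len(squared)


# Made-up standardized loadings for a three-item subscale (illustration only).
example_loadings = [0.8, 0.7, 0.6]
ave = average_variance_extracted(example_loadings)
print(f"AVE = {ave:.3f}")
```

Under these assumptions, a construct whose items all load at 1.0 would have an AVE of 1.0, and weaker loadings pull the estimate toward 0.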

For the chi-square difference test, the χ² of a constrained model, in which the correlation between the factors is fixed at 1.00, is compared with the χ² of the original model, in which the correlation between the constructs is estimated freely. A significantly lower chi-square value for the unconstrained model implies good discriminant validity.
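As a rough sketch of this comparison, the following Python function computes a plain chi-square difference for two nested models that differ by one degree of freedom (the fixed correlation). The function name and input values are hypothetical. It does not apply any scaling correction, so with Satorra–Bentler scaled statistics a scaled difference test would be needed instead.

```python
import math


def chi_square_difference_test(chi2_constrained, df_constrained,
                               chi2_free, df_free, alpha=0.05):
    """Compare a constrained model with a freely estimated nested model.

    Returns (difference in chi-square, p-value, significant at alpha).
    Only a 1-df difference is handled in this sketch.
    """
    delta_chi2 = chi2_constrained - chi2_free
    delta_df = df_constrained - df_free
    if delta_df != 1:
        raise ValueError("this sketch only handles a 1-df difference")
    if delta_chi2 < 0:
        raise ValueError("the constrained model should not fit better")
    # For 1 df, a chi-square variate is a squared standard normal, so
    # P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(delta_chi2 / 2.0))
    return delta_chi2, p_value, p_value < alpha


# Made-up fit statistics (illustration only, not results from the study).
delta, p, significant = chi_square_difference_test(110.0, 51, 100.0, 50)
print(f"delta chi-square = {delta:.2f}, p = {p:.4f}, significant = {significant}")
```

A significant result here would favor the freely estimated model, which is the pattern the note interprets as evidence of discriminant validity.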

The supervision/peer support variable was a binary predictor coded 0 if there was no supervision or peer support and 1 if the practitioner received some supervision/peer support. Since it was desirable to estimate the intercept and the slope for the group coded 0, no mean centering was applied for this predictor (reference 103).
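The coding choice in this note can be illustrated with a short Python sketch. It assumes that continuous predictors were mean-centered while the binary predictor kept its 0/1 coding; the variable names and values are hypothetical.

```python
def mean_center(values):
    """Subtract the sample mean so that 0 represents the average score."""
    mean = sum(values) / len(values)
    return [value - mean for value in values]


# Made-up data for four practitioners (illustration only).
workplace_support = [2.0, 3.0, 4.0, 5.0]   # continuous subscale score
supervision = [0, 1, 1, 0]                 # 0 = none, 1 = some support

# Center the continuous predictor; leave the binary predictor uncentered so
# that the intercept and slopes refer to the group coded 0.
centered_support = mean_center(workplace_support)
design_rows = list(zip(centered_support, supervision))
print(design_rows)
```

With this coding, the model intercept describes a practitioner with an average subscale score and no supervision or peer support.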

The full information maximum likelihood (FIML) procedure is not available for logistic regression (FIML can only be used with maximum likelihood estimation).

Bartlett’s test of sphericity is sensitive to deviations from normality, and, since the first sample was non-normally distributed, the results of this test should be interpreted with caution.

Results based on multiple imputations present a range of Cox and Snell R² values across the five imputed samples.
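For readers unfamiliar with this statistic, the sketch below computes the Cox and Snell R² from the log-likelihoods of a null and a fitted logistic model, then reports the range across imputed data sets. The function name, sample size, and log-likelihood values are hypothetical and serve only to show the arithmetic.

```python
import math


def cox_snell_r2(ll_null, ll_model, n):
    """Cox and Snell R-squared: 1 - exp(2 * (LL_null - LL_model) / n)."""
    return 1.0 - math.exp(2.0 * (ll_null - ll_model) / n)


# Made-up (null, fitted) log-likelihoods for five imputed data sets.
imputed_fits = [
    (-120.0, -100.0),
    (-121.0, -102.0),
    (-119.5, -101.0),
    (-120.5, -99.0),
    (-120.0, -103.0),
]
n_cases = 200  # assumed sample size, for illustration only

r2_values = [cox_snell_r2(ll0, ll1, n_cases) for ll0, ll1 in imputed_fits]
r2_range = (min(r2_values), max(r2_values))
print(f"Cox and Snell R-squared range: {r2_range[0]:.3f} to {r2_range[1]:.3f}")
```

Reporting the minimum and maximum in this way mirrors the note's description of a range rather than a single pooled value.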

References

American Psychological Association. Effective Strategies to Support Positive Parenting in Community Health Centers. Washington, D.C.: Report of the Working Group on Child Maltreatment Prevention in Community Health Centers; 2009.

United Nations Office on Drugs and Crime. Compilation of evidence-based family skills training programmes. Vienna, Austria: United Nations; 2010.

World Health Organization. Violence Prevention: The evidence. Geneva, Switzerland: Department of Violence and Injury Prevention and Disability; 2010.

Ogden T, Fixsen DL. Implementation science: A brief overview and a look ahead. Zeitschrift für Psychologie. 2014;222(1):4–11.

LaPelle NR, Zapka J, Ockene JK. Sustainability of public health programs: The example of tobacco treatment services in Massachusetts. American Journal of Public Health. 2006;96(8):1363–1369.

Goodman RM, Steckler A. A model for the institutionalization of health promotion programs. Journal of Family and Community Health. 1989;11(4):63–78.

Gaven S, Schorer J. From training to practice transformation: Implementing a public health parenting program. Australian Institute of Family Studies. 2013(93):50–57.

World Health Organization. From Evidence to Policy: Expanding Access to Family Planning. Geneva, Switzerland: Department of Reproductive Health and Research; 2012.

Scheirer MA. Is sustainability possible? A review and commentary on empirical studies of program sustainability. American Journal of Evaluation. 2005;26(3):320–346.

Wiltsey Stirman S, Kimberly J, Cook N, et al. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implementation Science. 2012;7(1):17–36.

Fixsen D, Blase KA, Naoom SF, et al. Implementation Drivers: Assessing Best Practices. Chapel Hill, NC: Creative Commons License; 2015.

Durlak JA, Dupre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41(3–4):327–350.

Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly. 2004;82(4):581–629.

Scheirer MA, Dearing JW. An agenda for research on the sustainability of public health programs. American Journal of Public Health. 2011;101(11):2059–2067.

Aarons GA, Hurlburt M, McCue Horwitz S. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Journal of Administration and Policy in Mental Health. 2011;38(1):4–23.

Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50–65.

Meyers DC, Durlak JA, Wandersman A. The quality implementation framework: A synthesis of critical steps in the implementation process. American Journal of Community Psychology. 2012;50(3–4):462–480.

Sanders MR, Turner KMT, McWilliam J. The Triple P – Positive Parenting Program: A community wide approach to parenting and family support. In: MJ Van Ryzin, KL Kumpfer, GM Fosco, MT Greenberg (Eds). Family-Based Prevention Programs for Children and Adolescents: Theory, Research, and Large-scale Dissemination. New York: Psychology Press; 2015:130–155.

Marquez L, Holschneider S, Broughton E, et al. Improving Health Care: The Results and Legacy of the USAID Health Care Improvement Project. Bethesda, MD: University Research Co., LLC (URC); 2014.

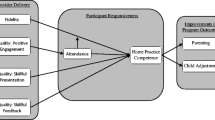

Hodge LM, Turner KMT. Sustained Implementation of Evidence-based Programs in Disadvantaged Communities: A Conceptual Framework of Supporting Factors. 2015.

Rogers EM. Diffusion of Innovations. 5th ed. New York: Free Press; 2003.

Weiner BJ. A theory of organizational readiness for change. Implementation Science. 2009;4(1):67–76.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health. 1999;89(9):1322–1327.

Fixsen D, Naoom SF, Blase KA, et al. Implementation Research: A Synthesis of the Literature. Tampa, FL: Louis de la Parte Florida Mental Health Institute; 2005.

Spoth R, Greenberg MT, Bierman K, et al. PROSPER community-university partnership model for public education systems: Capacity building for evidence-based, competence-building prevention. Prevention Science. 2004;5(1):31–39.

Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. Journal of Substance Abuse Treatment. 2007;33(2):111–120.

Ilott I, Gerrish K, Laker S, et al. Bridging the gap between knowledge and practice: Using theories, models and conceptual frameworks. Getting Started: Naming and Framing the Problem. 2013;10(2):1–4.

Proctor EK, Landsverk J, Aarons GA, et al. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Journal of Administration and Policy in Mental Health. 2009;36(1):24–34.

Spoth R, Guyll M, Redmond C, et al. Six-year sustainability of evidence-based intervention implementation quality by community-university partnerships: The PROSPER study. American Journal of Community Psychology. 2011;48(3–4):412–425.

Schell SF, Luke DA, Schooley MW, et al. Public health program capacity for sustainability: A new framework. Implementation Science. 2013;8(1):15–24.

Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Joint Commission Journal on Quality and Patient Safety. 2008;34(4):228–243.

Proctor EK, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Journal of Administration and Policy in Mental Health. 2011;38(2).

Armstrong R, Waters E, Moore L, et al. Improving the reporting of public health intervention research: Advancing TREND and CONSORT. Journal of Public Health. 2008;30(1):103–109.

Southam-Gerow M, Arnold C, Rodriguez A, et al. Acting locally and globally: Dissemination implementation around the world and next door. Journal of Cognitive and Behavioral Practice. 2014;21:127–133.

Fixsen D, Blase K, Naoom S, et al. Stage-based measures of implementation components. National Implementation Research Network. 2010:1–43.

Brown LD, Feinberg ME, Greenberg MT. Measuring coalition functioning: Refining constructs through factor analysis. Health Education & Behavior. 2012;39(4):486–497.

Savaya R, Spiro SE. Predictors of sustainability of social programs. American Journal of Evaluation. 2012;33(1):26–43.

Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: The evidence-based practice attitude scale (EBPAS). Journal of Mental Health Services Research. 2004;6:61–67.

Bartholomew LK, Parcel GS, Kok G, et al. Diffusion of innovations theory. In: Planning Health Promotion Programs: An Intervention Mapping Approach. 3rd ed. California: Jossey-Bass; 2011:108–111.

Cook JM, Schnurr PP, Biyanova T, et al. Apples don't fall far from the tree: Influences on psychotherapists’ adoption and sustained use of new therapies. Psychiatric Services. 2009;60(5):671–676.

Cohen LH, Sargent MM, Sechrest LB. Use of psychotherapy research by professional psychologists. American Psychologist. 1986;41(2):198–206.

Reding MEJ, Chorpita BF, Lau AS, et al. Providers’ attitudes toward evidence-based practices: Is it just about providers, or do practices matter, too? Journal of Administration and Policy in Mental Health. 2014;41(6):767–776.

Shapiro CJ, Prinz RJ, Sanders MR. Sustaining use of an evidence-based parenting intervention: Practitioner perspectives. Journal of Child and Family Studies. 2014;24(6):1615–1624.

Glisson C. The organizational context of children's mental health services. Clinical Child and Family Psychology Review. 2002;5(4):233–253.

Beidas RS, Marcus S, Aarons GA, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. Journal of American Medical Association Pediatrics. 2015;169(4):374–382.

Aarons GA, Green AE, Willging CE, et al. Mixed-method study of a conceptual model of evidence-based intervention sustainment across multiple public-sector service settings. Implementation Science. 2014;9(1):183.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: The development and validity testing of the Implementation Climate Scale (ICS). Implementation Science. 2014;9(1):157–168.

Jacobs S, Weiner B, Bunger A. Context matters: Measuring implementation climate among individuals and groups. Implementation Science. 2014;9(1):46–60.

Aarons GA, Sommerfeld DH, Walrath-Greene CM. Evidence-based practice implementation: The impact of public versus private sector organization type on organizational support, provider attitudes, and adoption of evidence-based practice. Implementation Science. 2009;4(1):83–83.

Gruen RL, Elliott JH, Nolan ML, et al. Sustainability science: An integrated approach for health-programme planning. The Lancet. 2008;372(9649):1579–1589.

Simpson DD. A conceptual framework for transferring research to practice. Journal of Substance Abuse Treatment. 2002;22(4):171–182.

Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22(4):197–209.

Edmondson AC. Speaking up in the operating room: How team leaders promote learning in interdisciplinary action teams. Journal of Management Studies. 2003;40(6):1419–1452.

Mancini JA, Marek LI. Sustaining community-based programs for families: Conceptualization and measurement. Family Relations. 2004;53(4):339–347.

Schneider B, Ehrhart MG, Mayer DM, et al. Understanding organization-customer links in service settings. Academy of Management Journal. 2005;48(6):1017–1032.

Van de Ven A, Douglas P, Garud R, et al. The Innovation Journey. New York, NY: Oxford University Press; 1999.

Spence SH, Wilson J, Kavanagh D, et al. Clinical supervision in four mental health professions: A review of the evidence. Behaviour Change. 2001;18(3):135–155.

Marquez L, Kean L. Making supervision supportive and sustainable: New approaches to old problems. Maximizing Access and Quality Initiative. 2002(4).

Turner KMT, Sanders MR. Dissemination of evidence-based parenting and family support strategies: Learning from the Triple P – Positive Parenting Program system approach. Aggression and Violent Behavior, A Review Journal. 2006;11(2):176–193.

Sanders MR, Turner KMT. Reflections on the challenges of effective dissemination of behavioural family intervention: Our experience with the Triple P – Positive Parenting Program. Journal of Child and Adolescent Mental Health. 2005;10(4):158–169.

Pallas SW, Minhas D, Pérez-Escamilla R, et al. Community health workers in low- and middle-income countries: What do we know about scaling up and sustainability. American Journal of Public Health. 2013;103(7):e74–e82.

Beidas RS, Kendall PC. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Journal of Clinical Psychology: Science and Practice. 2010;17(1):1–30.

Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: A systematic review of structural, organizational, provider, patient, and innovation level measures. Implementation Science. 2013;8(22).

Weiner BJ, Amick H, Lee SD. Conceptualization and measurement of organizational readiness for change: A review of the literature in health services research and other fields. Journal of Medical Care Research and Review. 2008;65(4):379–436.

Emmons KM, Weiner B, Fernandez Eulalia M, et al. Systems antecedents for dissemination and implementation: A review and analysis of measures. Health Education and Behavior. 2012;39:87–105.

Lewis CC, Stanick CF, Martinez RG, et al. The society for implementation research collaboration instrument review project: A methodology to promote rigorous evaluation. Implementation Science. 2015;10(2).

Brehaut JC, Graham ID, Wood TJ, et al. Measuring acceptability of clinical decision rules: validation of the Ottawa acceptability of decision rules instrument (OADRI) in four countries. Medical Decision Making. 2010;30(3):398–408.

Bolman C, De Vries H, Mesters I. Factors determining cardiac nurses’ intentions to continue using a smoking cessation protocol. Heart & Lung: The Journal of Acute and Critical Care. 2002;31(1):15–24.

Whittingham K, Sofronoff K, Sheffield JK. Stepping Stones Triple P: A pilot study to evaluate acceptability of the program by parents of a child diagnosed with an Autism Spectrum Disorder. Research in Developmental Disabilities. 2006;27:364–380.

Steckler A, Goodman RM, McLeroy KR, et al. Measuring the diffusion of innovative health promotion programs. American Journal of Health Promotion. 1992;6(3):214–224.

Trent LR. Development of a Measure of Disseminability (MOD) [Dissertation]: Psychology, University of Mississippi; 2010.

Maher L, Gustafson D, Evans A. Sustainability model and guide. NHS Institute for Innovation and Improvement. 2007. Available online at http://www.institute.nhs.uk/sustainability_model/general/welcome_to_sustainability.html. Accessed on February 17, 2016.

Cohen RJ, Swerdlik M, Sturman E. Psychological Testing and Assessment: An Introduction to Tests and Measurement. 8th ed. Boston, MA: McGraw-Hill Higher Education; 2013.

Klein KJ, Conn AB, Sorra JS. Implementing computerized technology: An organizational analysis. Journal of Applied Psychology. 2001;86(5):811–824.

Sanders MR. Development, evaluation, and multinational dissemination of the Triple P-Positive Parenting Program. Annual Review of Clinical Psychology. 2012;8:345–379.

Sanders MR, Kirby JN, Tellegen CL, et al. The Triple P-Positive Parenting Program: A systematic review and meta-analysis of a multi-level system of parenting support. Clinical Psychology Review. 2014;34(4):337–357.

Turner KMT, Nicholson JM, Sanders MR. The role of practitioner self-efficacy, training, program and workplace factors on the implementation of an evidence-based parenting intervention in primary care. Journal of Primary Prevention. 2011;32(2):95–112.

Turner KMT, Sanders MR, Hodge L. Issues in professional training to implement evidence-based parenting programs: The preferences of Indigenous practitioners. Australian Psychologist. 2014;49(6):384–394.

Turner KMT, Markie-Dadds C, Sanders MR. Facilitator’s manual for Group Triple P (3rd ed.). Brisbane, QLD, Australia: Triple P International; 2010.

Turner KMT, Sanders MR, Markie-Dadds C. Practitioner's manual for Primary Care Triple P (2nd ed.). Brisbane, QLD, Australia: Triple P International; 2010.

Feinberg ME, Chilenski SM, Greenberg MT, et al. Community and team member factors that influence the operations phase of local prevention teams: The PROSPER project. Prevention Science. 2007;8(3):214–226.

Shapiro CJ, Prinz RJ, Sanders MR. Facilitators and barriers to implementation of an evidence-based parenting intervention to prevent child maltreatment: The Triple P - Positive Parenting Program. Journal of Child Maltreatment. 2012;17(1):86–95.

Muthén LK, Muthén BO. Mplus User's Guide. 7th ed. Los Angeles, CA: Muthén & Muthén; 1998–2012.

Schmitt TA. Current methodological considerations in exploratory and confirmatory factor analysis. Journal of Psychoeducational Assessment. 2011;29:304–321.

Stevens JP. Applied multivariate statistics for the social sciences. 4th ed. New York, NY: Taylor & Francis Group; 2002.

Satorra A, Bentler PM. Corrections to test statistics and standard errors in covariance structure analysis. In: von Eye A, Clogg CC, (Eds). Latent variables analysis: Applications for Developmental Research. Thousand Oaks, CA: Sage; 1994.

Browne MW, Cudeck R. Single sample cross-validation indexes for covariance structures. Multivariate Behavioral Research. 1989;24(4):445–455.

Hu LT, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal. 1999;6(1):1–55.

Churchill GA Jr. A paradigm for developing better measures of marketing constructs. Journal of Marketing Research. 1979;16(1):64–73.

Gerbing DW, Anderson JC. An updated paradigm for scale development incorporating unidimensionality and its assessment. Journal of Marketing Research. 1988;25(2):186–192.

Fornell C, Larcker DF. Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research. 1981;18(1):39–50.

Bollen KA. Structural Equations with Latent Variables. New York, NY: Wiley; 1989.

Afshartous D, Preston RA. Key results of interaction models with centering. Journal of Statistics Education. 2011;19:1–23.

De Vaus DA. Analyzing Social Science Data. London, England: SAGE; 2002.

Bentler PM. EQS 6 Structural Equations Program Manual. Encino, CA: Multivariate Software, Inc.; 2006.

Enders CK. Applied Missing Data Analysis: Methodology in Social Sciences. New York, NY: Guilford Press; 2010.

Van Prooijen J-W, Van der Kloot WA. Confirmatory analysis of exploratively obtained factor structures. Journal of Educational and Psychological Measurement. 2001;61(5):777–792.

Child D. The Essentials of Factor Analysis. 2nd ed. London: Cassell Educational Limited; 1990.

Jasuja GK, Chou C-P, Bernstein K, et al. Using structural characteristics of community coalitions to predict progress in adopting evidence-based prevention programs. Journal of Evaluation and Program Planning. 2005;28:173–184.

Grol R, Grimshaw J. From best evidence to best practice: Effective implementation of change in patients’ care. Lancet. 2003;362:1225–1230.

Pluye P, Potvin L, Denis J, et al. Program sustainability begins with the first events. Evaluation and Program Planning. 2005;28(2):123–137.

Langley A, Denis JL. Beyond evidence: The micropolitics of improvement. British Medical Journal. 2011;20(Suppl 1):i43–i46.

Aiken LS, West SG, Reno RR. Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage Publications; 1991.

Rubin DB. Multiple Imputation for Nonresponse in Surveys. New York, NY: Wiley; 1987.

Acknowledgments

This research was conducted with funding support from the Australian Research Council (ARC Linkage grant LP110200701: Assessing the effectiveness, acceptability, and sustainability of a culturally adapted evidence-based intervention for Indigenous parents). Many thanks go to the practitioners who completed the survey and to Jenna McWilliam and TPI for arranging the survey distribution.

Author Contributions and Disclosures

The Triple P – Positive Parenting Program is developed and owned by The University of Queensland. The University, through its main technology transfer company, UniQuest Pty Ltd, has licensed Triple P International (TPI) Pty Ltd to publish and disseminate Triple P worldwide. Royalties stemming from published Triple P resources are distributed to the Faculty of Health and Behavioural Sciences; School of Psychology; Parenting and Family Support Centre; and contributory authors. TPI is a private company, and no author has any share or ownership in it. TPI had no involvement in the study design, collection, analysis or interpretation of data, or writing of this report. Matthew Sanders and Karen Turner are contributory authors and receive royalties from TPI. TPI engages the services of Matthew Sanders and Lauren Hodge as consultants to support program integrity and dissemination. Project design and data collection were managed by Lauren Hodge as part of her doctoral research. Data analysis and interpretation were led by Ania Filus. All authors approved the final manuscript.

Cite this article

Hodge, L.M., Turner, K.M.T., Sanders, M.R. et al. Sustained Implementation Support Scale: Validation of a Measure of Program Characteristics and Workplace Functioning for Sustained Program Implementation. J Behav Health Serv Res 44, 442–464 (2017). https://doi.org/10.1007/s11414-016-9505-z

DOI: https://doi.org/10.1007/s11414-016-9505-z