Automated Workflow for High-Resolution 4D Vegetation Monitoring Using Stereo Vision

Abstract

1. Introduction

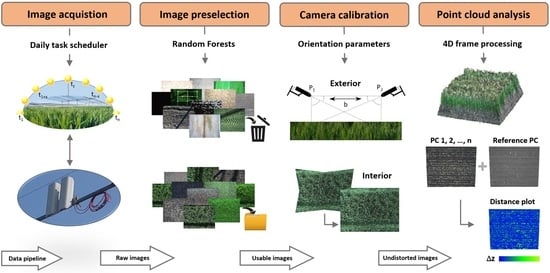

2. Experimental Setup

2.1. Field Site: GCEF Bad Lauchstädt

2.2. Stereo Camera Setup

2.3. Image Acquisition

2.4. Measurement of Reference Data

3. Data Processing

3.1. Image Preselection

3.2. Camera Calibration

3.3. Time-Lapse Stereo Reconstruction

4. Results

4.1. Image Classification Using Machine Learning

4.2. 3D Point Clouds: Reconstruction, Evaluation, Distances

5. Discussion

6. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| (a) Date | hcalc [cm] | href [cm] | hmean [cm] | hmedian [cm] | errabs [cm] | (b) | No. Points |
|---|---|---|---|---|---|---|---|
| 2021-11-03 | 0.00 | - | 0.00 | 0.00 | - | 1.62–2.38 | 4.1M |
| 2021-11-11 * | 3.51 | - | 0.60 | 0.44 | 2.78 | 1.67–2.48 | 5.3M |
| 2022-04-22 | 22.05 | 22 | 13.56 | 13.90 | 2.89 | 1.83–3.00 | 6.4M |
| 2022-04-26 * | 25.57 | - | 17.32 | 17.51 | 3.48 | 1.90–4.08 | 4.7M |
| 2022-04-27 | 27.69 | 26 | 20.72 | 21.13 | 3.30 | 1.72–4.69 | 4.2M |
| 2022-05-03 | 44.27 | 43 | 31.64 | 32.08 | 3.55 | 1.53–6.81 | 3.1M |
| 2022-05-11 | 67.47 | 64 | 49.67 | 50.52 | 3.29 | 1.26–7.29 | 3.6M |
| 2022-05-18 | 83.43 | 84 | 62.84 | 63.56 | 3.33 | 1.30–6.42 | 3.2M |
| 2022-05-26 | 92.65 | 90 | 70.04 | 71.47 | 3.30 | 1.29–5.95 | 2.6M |
| 2022-05-28 * | 94.18 | - | 73.35 | 74.72 | 3.24 | 1.27–5.48 | 2.9M |
| 2022-06-01 | 92.07 | 92 | 73.73 | 74.99 | 3.26 | 1.32–6.44 | 2.6M |
| 2022-06-15 | 86.75 | 87 | 74.43 | 75.55 | 2.82 | 1.30–5.67 | 3.5M |
| 2022-06-23 | 84.36 | 86 | 72.57 | 73.78 | 3.03 | 1.34–5.80 | 3.6M |
| 2022-06-28 | 85.61 | 82 | 73.06 | 73.63 | 2.88 | 1.46–6.25 | 3.9M |
| 2022-06-28 | 34.78 | - | 21.90 | 22.11 | 2.69 | 1.63–3.65 | 6.1M |
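The agreement between the calculated and reference heights listed in the table can be summarised with a few lines of Python. This is a minimal sketch and not the authors' evaluation procedure. It assumes that hcalc is the height derived from the stereo reconstruction and href the manually measured reference height, and it uses only the ten dates for which both values are listed. The names `PAIRS` and `height_errors` are hypothetical and introduced here for illustration only.

```python
import math

# (hcalc, href) pairs in cm, copied from the table rows that list both values.
# Assumption: hcalc is the reconstructed height, href the manual reference.
PAIRS = [
    (22.05, 22), (27.69, 26), (44.27, 43), (67.47, 64), (83.43, 84),
    (92.65, 90), (92.07, 92), (86.75, 87), (84.36, 86), (85.61, 82),
]


def height_errors(pairs):
    """Return (bias, mae, rmse) of calculated minus reference height in cm."""
    diffs = [calc - ref for calc, ref in pairs]
    n = len(diffs)
    bias = sum(diffs) / n                             # mean signed deviation
    mae = sum(abs(d) for d in diffs) / n              # mean absolute deviation
    rmse = math.sqrt(sum(d * d for d in diffs) / n)   # root mean square deviation
    return bias, mae, rmse


bias, mae, rmse = height_errors(PAIRS)
print(f"bias={bias:.2f} cm, MAE={mae:.2f} cm, RMSE={rmse:.2f} cm")
```

For the values in the table, this gives a mean absolute deviation of roughly 1.5 cm and a root mean square deviation of roughly 2.0 cm. The positive bias indicates that, under the stated assumption about the columns, the calculated heights tend to lie slightly above the reference heights.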


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kobe, M.; Elias, M.; Merbach, I.; Schädler, M.; Bumberger, J.; Pause, M.; Mollenhauer, H. Automated Workflow for High-Resolution 4D Vegetation Monitoring Using Stereo Vision. Remote Sens. 2024, 16, 541. https://doi.org/10.3390/rs16030541
